@inproceedings{yu-etal-2024-eliciting,

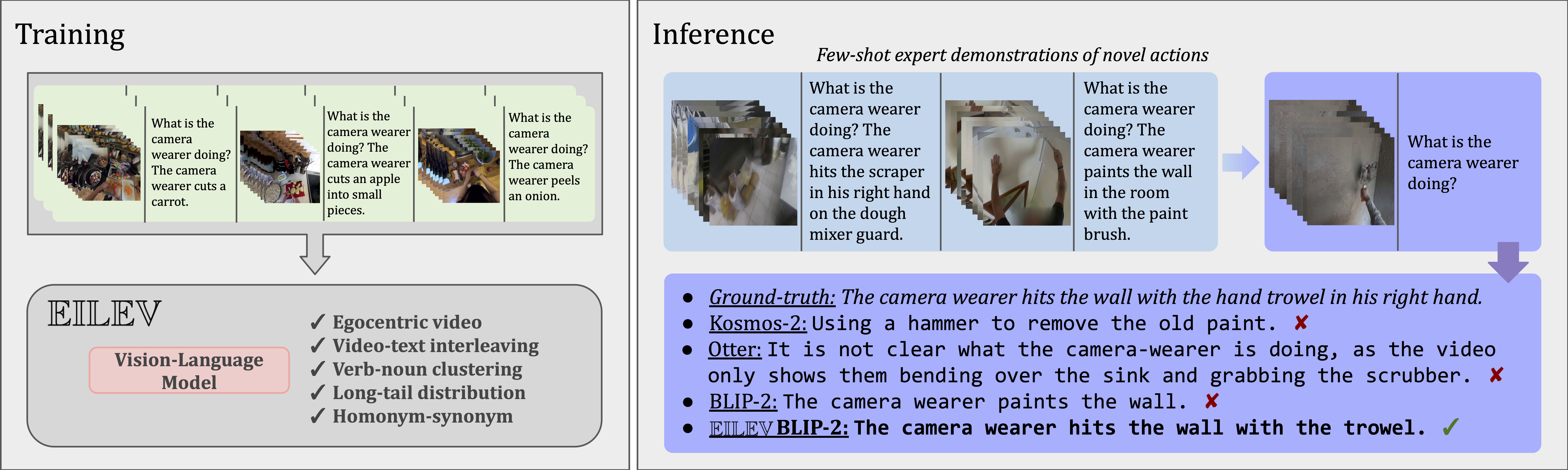

title = "Eliciting In-Context Learning in Vision-Language Models for Videos Through Curated Data Distributional Properties",

author = "Yu, Keunwoo and

Zhang, Zheyuan and

Hu, Fengyuan and

Storks, Shane and

Chai, Joyce",

editor = "Al-Onaizan, Yaser and

Bansal, Mohit and

Chen, Yun-Nung",

booktitle = "Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing",

month = nov,

year = "2024",

address = "Miami, Florida, USA",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.emnlp-main.1137",

pages = "20416--20431",

}This work was supported in part by the DARPA PTG program HR00112220003. We would like to thank the entire MSPR team for their helpful discussion and feedback. We would also like to thank the anonymous reviewers for their valuable comments and suggestions.